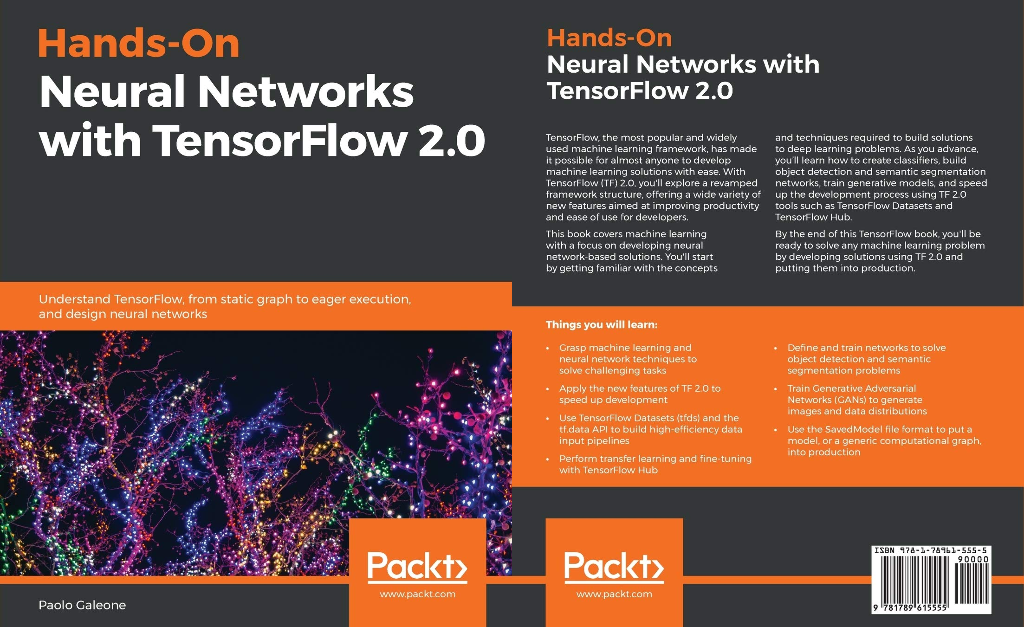

I’m really happy to announce that the first edition of Hands-On Neural Networks with TensorFlow 2.0 is out!

The book is divided into three parts:

- Machine Learning and Neural Networks fundamentals: an explanation in simple words of the theory that powers many machine learning algorithms, with a focus on neural networks

- TensorFlow fundamentals: the TensorFlow 1.x architecture, the new TensorFlow 2.0 architecture, its new features and ecosystem, and how to transition from TensorFlow 1.x to 2.0

- Neural Networks applications: how to solve problems by developing neural network-based solutions while learning how the new features of the TensorFlow 2.0 ecosystem can help at every step of the machine learning pipeline, production included

The book is available on three different Amazon stores, in both paperback and Kindle editions!

Do not hesitate to leave feedback on Amazon or send me an email!